
-

From 10,000 Manual Checks to Continuous Insight

TL;DR: We are automating voltage transformer (VT) maintenance by linking CIM model context with time-series analysis and lightweight apps. Going forward, we expect fewer manual site checks, earlier detection of issues, and continuous visibility across substations, all without changing field equipment.

Operating a national power system means managing one of the most complex …

-

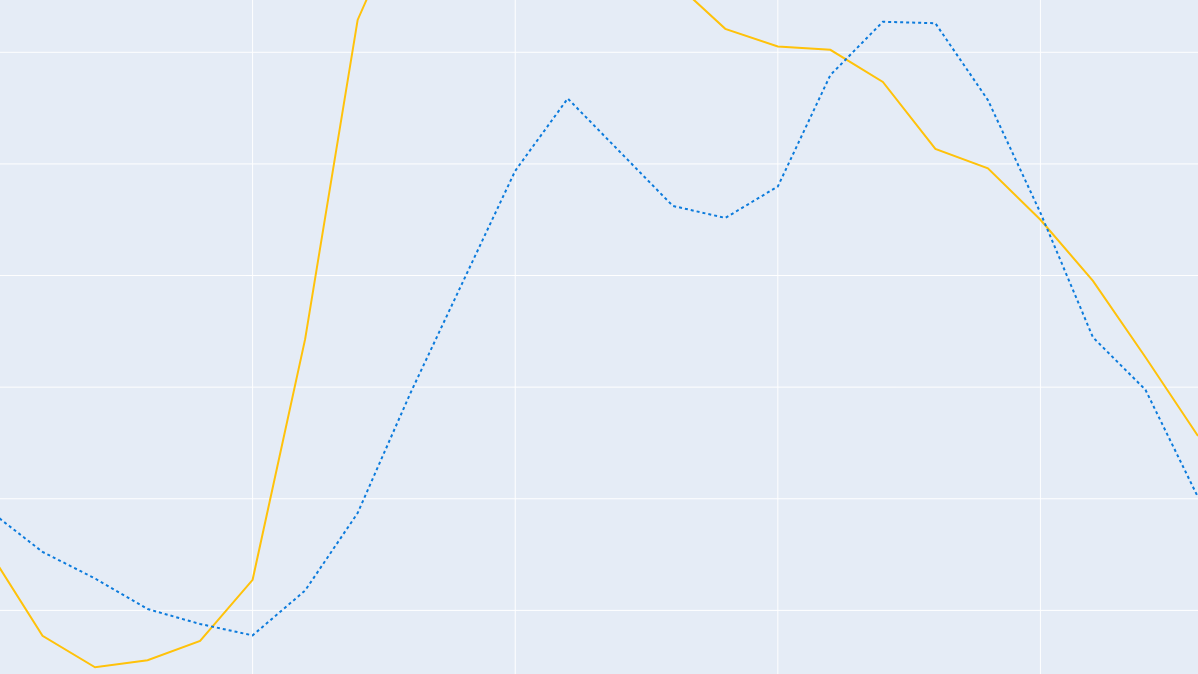

Improving and evaluating prediction intervals

Using artificial intelligence and statistical methods to predict the future is very helpful, but the results are not always easy to understand. How a model arrives at a prediction can be hard for humans to follow. As a result, it can be difficult to trust a model to make the right decisions, especially if the model is used for …

-

Science-backed techniques to improve your data visualizations, part 1

A picture is worth a thousand words. But how do you make sure your plots are saying the right words?

-

How We Made Accurate Power Consumption Forecasts in Just Six Hours

The Data Science section competed to make the best forecast in six hours.